After the storm

We shall not cease from exploration And the end of all our exploring Will be to arrive where we started And know the place for the first time. -T...

Last month (“Using predictions in the service of ideals and profit,” Sept. 23) I asked what makes a good prediction. I made an analogy to hurricane forecasting. Predictions should:

Can we use these criteria to evaluate political prediction models?

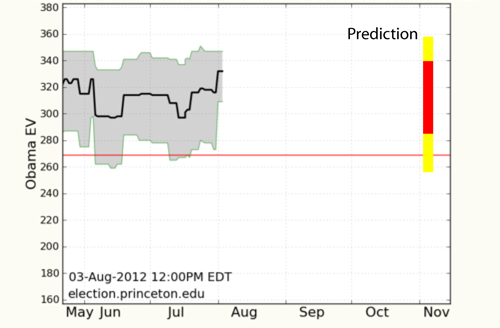

Here is my first prediction (“A real prediction for November (Take 1),” Aug. 3):

…and here we are today.

The red strike zone, which captures the middle two-thirds of predicted outcomes, has stayed in the same general place:

These numbers have one property of a good prediction: consistency. But are they right? We’ll learn more in a few weeks.

Today I propose some criteria by which to evaluate predictions. These criteria are applicable to both the Presidential race and (with modification) to downticket races as well.

This year, Presidential political predictions have come in multiple flavors:

Which of these is best – and what does “best” mean?

Here I propose five benchmarks for these different types of model. After the election, we will score as many of them as we can.

(1) Final EV outcome based on last prediction. For poll-based calculations, this should be the easy one, because Election Eve polls do so well. In past elections, the current PEC algorithm missed by 0 EV (no error) in 2004 and 1 EV in 2008. FiveThirtyEight should do well also.

However, for econometrically-based models, this test is not trivial. One well-established model by Abramowitz predicts a narrow Obama re-election, while Ray Fair’s model is on the fence. Drew Linzer’s Votamatic has stayed consistently near Obama 332 EV. In a newer model that gets reliable headlines, two Colorado political scientists think Romney will get over 330 EV. As the Dire Straits song goes: Two men say they’re Jesus. One of them must be wrong.

The benchmark: EV error.

(2) Vote share. This is a straightforward tallying, for instance of the two-candidate margin.

The benchmark: Deviations of Election Eve predictions from the actual outcomes in the 50 states and DC.

(3) Long-term predictive value. Predictions are most useful if they give information far in advance. For example, on July 14th the major aggregators showed Obama at 297 EV (Electoral-vote.com), 302.5 EV (FiveThirtyEight), 312 EV (Princeton), and 332 EV (RealClearPolitics). If the final outcome is Obama 290 EV, then that day’s best performer would be Electoral-vote.com with an error of 7 EV.*

The benchmark: Compare final outcome with median predictions from every day of the campaign. Score using the median absolute deviation.
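As a rough sketch of how benchmark (3) could be scored, the snippet below reuses the July 14 numbers and the hypothetical Obama 290 EV outcome from the example. The function names are illustrative assumptions, not part of any aggregator's code.

```python
from statistics import median


def absolute_errors(predictions, final_ev):
    """Absolute EV error of each prediction against the final outcome."""
    return [abs(p - final_ev) for p in predictions]


def long_term_score(daily_predictions, final_ev):
    """Median absolute deviation of a series of daily predictions from the final outcome."""
    return median(absolute_errors(daily_predictions, final_ev))


# July 14 snapshot quoted in the text.
july_14 = {
    "Electoral-vote.com": 297,
    "FiveThirtyEight": 302.5,
    "Princeton": 312,
    "RealClearPolitics": 332,
}
FINAL_EV = 290  # hypothetical final outcome used in the text

errors = {name: abs(ev - FINAL_EV) for name, ev in july_14.items()}
best = min(errors, key=errors.get)
print(best, errors[best])  # prints: Electoral-vote.com 7
```

For a full campaign, `long_term_score` would be called once per aggregator on that aggregator's complete list of daily predictions.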

(4) Uncertainties. A good prediction has the essential quality of conveying what we don’t know – the known unknowns, as Donald Rumsfeld memorably put it. This is underappreciated. For example, a House model at the Monkey Cage has been pointed out to me. But its uncertainties are large, so it conveys little information about the final outcome. In this respect, a median/mean prediction is not informative on its own.

The benchmark: Compare a model’s stated uncertainties with its actual deviations from the final outcome. In principle, the average error should be about 1 sigma. To be evaluated: Princeton Election Consortium and other models that give uncertainties.
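One way to make this comparison concrete is to divide each error by its stated sigma and take the root-mean-square of the results. If the errors are roughly normal and the stated sigmas are honest, that value should come out near 1. The sketch below assumes exactly that. The margins and sigmas are made-up numbers for illustration only.

```python
import math


def standardized_errors(predicted, sigmas, actual):
    """Each error (actual minus predicted) expressed in units of its stated sigma."""
    return [(a - p) / s for p, s, a in zip(predicted, sigmas, actual)]


def rms(values):
    """Root-mean-square of a list of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


# Hypothetical state margins (percentage points) and stated 1-sigma uncertainties.
predicted = [2.0, -5.0, 8.0, 0.5]
sigmas = [2.0, 3.0, 2.5, 1.5]
actual = [4.0, -2.0, 5.5, -1.0]

z = standardized_errors(predicted, sigmas, actual)
print(z, rms(z))
```

An RMS well below 1 would suggest the stated uncertainties are too wide (underconfidence), and a value well above 1 would suggest they are too narrow.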

(5) Accurate probabilities. If a forecaster says the win probability is 80%, in principle he/she is saying that in five such cases, he/she will be right about four times. It is common to be accidentally underconfident. FiveThirtyEight habitually underreports confidence (or overstates uncertainty), as does InTrade.

The benchmark: Evaluation is possible if a forecaster gives win probabilities for many outcomes, for example the 50 states and DC, Senate races, and/or House races. Compare (sum of win probabilities) with (correct races called with >50% probability). If these are similar, then the probabilities are accurate.
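A minimal sketch of this comparison follows. It reads "sum of win probabilities" as the sum of the probability given to whichever side the forecaster favored in each race. That reading is an assumption on my part, and the probabilities and results are invented for illustration.

```python
def calibration_summary(win_probs, dem_won):
    """Compare expected and actual numbers of correct calls.

    win_probs: stated probability of a Democratic win in each race.
    dem_won:   booleans, True where the Democrat actually won.
    """
    # Probability assigned to the favored side in each race.
    expected_correct = sum(max(p, 1 - p) for p in win_probs)
    # A call is correct when the side given >50% actually won.
    actual_correct = sum((p > 0.5) == won for p, won in zip(win_probs, dem_won))
    return expected_correct, actual_correct


# Hypothetical forecast for five races.
probs = [0.9, 0.8, 0.6, 0.3, 0.1]
results = [True, True, False, False, False]

expected, correct = calibration_summary(probs, results)
print(expected, correct)
```

Here the forecaster expects about 3.9 correct calls and gets 4, which is what well-calibrated probabilities would look like. With only five races the comparison is very noisy, so many races are needed.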

>>>

*As we collect information from various aggregators, intentions are important. Many aggregators (us included) give a daily snapshot of the current state of the race on any given day. In those cases, benchmarks (1) and (2) should be applied to the last day’s numbers. In other cases, predictions are made explicitly, so comparison (3) is fair. And so on.

Many of the benchmarks above can also be applied, with some adjustment, to Senate and House races. After the election I’ll do my best to collect information on these benchmarks. If anyone cares to help, I’d be delighted.

Please suggest different benchmarks in comments.

Thanks to Andrew Gelman and Inbad Inbad for discussion.

Potentially there are quite a few sources to track if you include pundits. Do you want input on who to track? Do you want volunteers to help track or are you going to keep this to a scope that you can do by yourself?

It’s been done – and pundit predictions are reliably crap. See, for instance, http://www.newyorker.com/archive/2005/12/05/051205crbo_books1

I think I read somewhere in one of your articles that Democrats historically outperform Democrat presidents on the house ballot by around 0.3%. Given that in the past week Obama has gained around 2% in his margin and assuming that he is able to maintain that trend and gain another .5% what is the probability that Democrats retake the house?

Your statement is untestable given that there has never been a “Democrat president.” Do you have a hypothesis regarding Democratic presidents?

Disagree, Andrew. Our language does that.

I’m not sure I have any suggestions, but I really appreciate the tone of this post. The election prediction landscape has the tendency to act like someone calculating how long it will take to get from point A to point B: the closer you get, the easier it is to be “right.” Lots of things change from last spring, mid-summer, etc., so I like that you put a long-term forecast out in early August (and that your model shows considerable stability). That said, many of the forecasts come off as predictive when they are simply describing the race as it currently stands (polls) and predicting based on past performance. This seems entirely useful, except that it tends to sound like: “this is what usually happens in these circumstances, except when it occasionally doesn’t.” From a confidence/sigma perspective, it seems pretty clear that Obama has a great chance of winning (albeit not quite a lock).

Sorry, this doesn’t really say anything useful other than a buried thanks for great work. I love data, and if the model I was building released a slow trickle (that becomes a deluge) of near-daily data, I’d tweak my model too.

Hi, I enjoy your site. I don’t normally comment. John Sides says that the electorate always blames the incumbent for natural disasters & expresses that blame at the ballot box. He says this can sometimes be avoided by declaring the state a natural disaster area. Most likely swing states affected would be VA, NH & possibly part of OH. Since he has a lead in PA, he may be ok there. He has a graph showing the possible effect.

This is the saddest thing I’ve ever heard. Just incredible he could lose over this.

I have a feeling Obama will do what is needed for the affected areas and gain good press. Don’t count on another Kanye West moment.

For one thing, the affected area includes Washington, D.C. This isn’t a remote thing for him.

If he declares those states affected, especially Va and Pa, natural disaster areas, they automatically get funds. That helps him. Early voting in NC and Maryland is affected, but the 3 counties that NC closed for early voting this weekend are Red. Maryland will expand early voting on Friday to cover for Monday. Pa, NY and the rest have no early voting. Obama looks Presidential handling this, he gets positive views. My only concern is, with power outages, will this affect voting on election day? Apparently, states claim they have disaster operations in effect.

I believe that you misunderstood Sides’ point. He says that “incumbent presidents can be punished for natural disasters” but that doesn’t mean always and will. He goes on to say that declaring a disaster area in the wake of a natural disaster can help an incumbent. In fact, according to the research he cites, the benefit to the president of declaring a disaster area outweighs the negative of all but the most extreme damage. Assuming an adequate response, this is unlikely to hurt Obama and may actually help him – provided the damage isn’t so severe that people are unable to vote.

Seems to me that (2) and (5) are far and away the most important, from a statistical point of view.

I’m interested in #3 personally. After the election I’m thinking of taking results in heavily polled states and using the averages to see what pollsters, if any, were able to hover around the final result, and at what date. Calling an election on the eve of the contest is like betting on a baseball game in the ninth inning.

Yes, I think #5 is very important, since most of us rely on these sites to give us long-term probabilities. I have always had the gut feeling that Nate Silver, for all his strengths, understates his confidence, and to be honest, his public appearances seem to suggest that he believes the same.

However, I’d like to see Prof. Wang’s numbers on this, because I’m too lazy to do the same!

I agree with Craig. #3 is numero uno.

–bks

I think #3 is the most interesting in the big-picture sense — though I think that over the long term we may find that none of the predictions are particularly good.

I think to make it truly distinct from #1 it would make sense to look at 2-month, 4-month and 6-month out bench marks.

Thanks Sam for your work! Great idea to make these comparisons. Very scientific of you 🙂

Those Colorado professors created that model this year saying it ‘back-predicted’ past elections and thus can predict this one.

I’m no professor, but that sounds awfully unacademic.

Describing past elections is the absolute minimum of competence when it comes to building a model.

Don’t think I worded that quite as I wanted after reading your response.

I meant to say that they fiddled with this model until it gave the results for all the past elections. Essentially, they didn’t create a model that you could input data and interpret, they created a model that interpreted specific data sets in a specific way. They took very specific information and extrapolated from there instead of working from a very broad data sample.

And of course they come away with a model that predicts New Mexico going Republican.

Not just New Mexico, but Minnesota too. I have no idea who will win but I have a hard time seeing Minnesota going Republican.

The term you’re looking for is “curve fitting.”

http://en.wikipedia.org/wiki/Curve_fitting

@JamesinCA

Yes I was, thank you!

Sam,

Perhaps I’m reading it wrong for 2004. However, it appeared that using decided voters PEC was dead on with the Bush win. When you attributed the undecideds to Kerry, giving him 1.5%, that’s where PEC was pretty far off. Is that correct?

That is the case, I believe it’s in the FAQ somewhere.

For (3): “Compare final outcome with median predictions from every day of the campaign. Score using the median absolute deviation.”

In this case, shouldn’t the mean, rather than median, be relevant? For example, if two aggregators each gave 181 daily projections, and one of them was off by 100EVs 90 times, 1 EV once, and 0 EV 90 times (mean absolute deviation of about 50, median absolute deviation of 1), shouldn’t he lose badly to someone who was off by 2 EVs 90 times, 1 EV once, and 0 EV 90 times (mean absolute deviation of 1, median absolute deviation of 1)?
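The arithmetic in this example can be checked directly. The short sketch below builds the two 181-day error series described in the comment (the labels "erratic" and "steady" are mine) and computes both summaries.

```python
from statistics import mean, median

# Absolute EV errors for 181 daily projections, as described in the comment.
erratic = [100] * 90 + [1] + [0] * 90  # off by 100 EV on 90 days
steady = [2] * 90 + [1] + [0] * 90     # off by 2 EV on 90 days

for name, errs in (("erratic", erratic), ("steady", steady)):
    print(name, "mean:", round(mean(errs), 2), "median:", median(errs))
```

Both series have a median absolute deviation of 1 EV, while the means are about 50 EV and 1 EV respectively, so the median cannot tell the two aggregators apart and the mean can.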

Please also compare performance against unskewedpolls.com (just for fun).

I don’t know about the rest of you, but I’m planning to send those profs in Colo. a “better luck next time” email after the election. Any model that foresees a Romney victory in New Mexico (!) is inevitably, ineluctably, irredeemably flawed.

For fun reading, google “Nate silver models failed” and click the second link for a recent history of econometric modeling and how they’ve performed.

I may have given it away in the search string.

Anyone seen this? They made a prediction of an Obama win pre-first debate, but of course Romney looked dead in the water then.

http://colleyrankings.com/election2012/

If a model is based on polls, and the polls are wrong, but the model gets it right are the polls really relevant?

Let’s look at Wisconsin for example: http://hwav.net/matchups.aspx?id=330&candidate=37,109

There have been 61 polls taken in Wisconsin and Romney has led or been tied in just 4. Obama carried the state by double digits in 2008; Kerry won by .5% in 2004.

If a Wisconsin model takes in “poll data” as an argument and does not arrive at “Obama is going to win,” what is the model doing that ignores the poll data to such a degree?

A lot of the aggregators basically perform linear transformations with the poll data; if the data is wrong, the result is wrong. I don’t think you would want it to work any other way?

Let’s look at another example, the Nebraska Senate race: http://hwav.net/matchups.aspx?ID=394

Kerrey (D) is trailing and has trailed, but it’s entirely possible that he could make up the ~5% difference between now and election day. The only way that could be predicted is if the poll data was supplemented with some other information; at that point, though, is the poll data really relevant, given that the other information, whatever it may be, clearly dominates the model?

I’m not aware of any models that use demographic data from the polls themselves coupled with demographic trends within a given state.

I guess my point is that if the polls are wrong, and a model is based on poll data, the model should be wrong too. Otherwise it’s not really a model that relies on poll data. Nobody should be rewarded for accidentally getting it correct.

Something like a simple moving average of poll data is going to be able to predict a winner in most elections. I remember looking at electoral-vote.com in 2004 and it was pretty much correct using some variation of a windowed average.

I think there needs to be some baseline, some rudimentary math baseline, that sort of says, “yes, your model works, but it’s way too complicated” given the performance relative to a simple calculation.
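A baseline of this kind could be as simple as the sketch below, which averages the most recent polls in a race and calls the leader. The window size, function names, and poll margins are all hypothetical. This is not a reconstruction of what electoral-vote.com or any other site actually computed.

```python
def windowed_average(margins, window=5):
    """Average of the most recent `window` poll margins (oldest first in the list)."""
    if not margins:
        raise ValueError("need at least one poll")
    recent = margins[-window:]
    return sum(recent) / len(recent)


def call_winner(margins, window=5):
    """Call the race for whoever leads the windowed average of D-minus-R margins."""
    avg = windowed_average(margins, window)
    if avg > 0:
        return "D"
    if avg < 0:
        return "R"
    return "tie"


# Hypothetical D-minus-R margins in percentage points, oldest poll first.
polls = [3.0, 1.0, -1.0, 4.0, 2.0, 5.0, 0.0]

print(windowed_average(polls), call_winner(polls))
```

A more elaborate model would then have to beat this calculation on the same races to justify its extra complexity.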

Another thing that I think is ignored is the quality of poll data used. If your data comes from RCP you are missing polls and will be getting an oversample of Ras and PPP. If you are using Pollster/TPM, you are getting more but still missing some.

By the way, maybe I misunderstood this: “Compare final outcome with median predictions from every day of the campaign. Score using the median absolute deviation.”

Do you mean: for each day for each aggregator, check that aggregator’s prediction against the final outcome? If so, I think it would be more accurate to say: “Compare final outcome with *the prediction from each* day of the campaign.”

Or, maybe you mean, calculate the median projection of all of an aggregator’s daily projections, and compare that to the final outcome. This seems to unfairly benefit the wild fluctuators. I think I learn more from someone who is steadily off by +5 EVs compared to the final result than from someone who varies wildly from +150EV error to -150EV error throughout the race, but happens to have a median that is a 0 EV error.

And more precisely: what values would you take as “predictions”? I suppose for your site, you would take the final Nov.6 prediction (not the EV estimator value of that day), and for example for 538.com, you would take the November 6 forecast (not the Now-cast). Correct?

I see that this might be tricky to implement, because most sites only provide a current state of the race, similar to Silver’s now-cast or your EV estimator. Votamatic, for example, only gives a current estimate, as far as I understand, like most aggregators.

Pat: Actually, Votamatic is one of the ones that does a prediction. They use a model to adjust poll results to predict what those polls will be by the final day. That’s why their prediction has been so steady at 332.

They reduce the influence of that prior over time so that toward the end, they are basically a poll-only model.

Sam, you might add a sub-point for 5 for those models which care to predict the correlations in their errors: they should be able to expressly calculate the probability of every unique outcome. For instance, someone might believe that the deviation from the polls in the presidential race in a state will likely correlate with the deviation from the polls in the Senate race in the same state. Such a forecaster could accurately say that the win probability is 70/30 +D for both races, while also believing that a Democratic win in both races is more probable than the 49% implied by your benchmark. It seems one should have a space to reward/punish those that care to predict the correlations.
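To illustrate the 49% figure and how correlation changes it, here is a small sketch that treats the two races as correlated yes/no outcomes. The correlation value of 0.5 is an arbitrary assumption chosen for illustration, and the function name is hypothetical.

```python
import math


def joint_win_probability(p1, p2, rho):
    """P(win both races) for two binary outcomes with correlation rho.

    Uses P(both) = p1*p2 + rho*sqrt(p1*(1-p1)*p2*(1-p2)), which follows from
    the definition of correlation between two 0/1 variables.
    """
    cov = rho * math.sqrt(p1 * (1 - p1) * p2 * (1 - p2))
    joint = p1 * p2 + cov
    # A valid joint probability must lie within these bounds (small float tolerance).
    lower = max(0.0, p1 + p2 - 1.0)
    upper = min(p1, p2)
    if not (lower - 1e-12 <= joint <= upper + 1e-12):
        raise ValueError("rho is not achievable for these marginal probabilities")
    return joint


independent = joint_win_probability(0.7, 0.7, 0.0)  # the 49% case
correlated = joint_win_probability(0.7, 0.7, 0.5)   # assumed correlation of 0.5

print(round(independent, 3), round(correlated, 3))
```

With independent errors the chance of winning both races is 0.7 × 0.7 = 0.49. With a correlation of 0.5 it rises to about 0.595, even though each race is still 70/30 on its own.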

I’m pretty naive about the math, but as to “In principle, the average error should be about 1 sigma,”, I would have thought it should be about 0.8 sigma (0.7979). Am I misunderstanding something basic? (Probably.)

Eric, your math is right. I think that was just a simplification. Here is a fun, provocative Taleb paper on that exact issue: (http://www-stat.wharton.upenn.edu/~steele/Courses/434/434Context/Volatility/ConfusedVolatility.pdf)

I commend this fine bit of nerdery. I should have said root-mean-square or done as you suggest.
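For readers who want to check the 0.7979 figure: for a zero-mean normal error, the expected absolute error is sigma × sqrt(2/π), while the root-mean-square error is sigma. The sketch below verifies both numerically with simulated draws. The sigma value, sample size, and seed are arbitrary choices.

```python
import math
import random

# Ratio of mean absolute error to sigma for a zero-mean normal distribution.
expected_ratio = math.sqrt(2 / math.pi)

random.seed(0)
sigma = 3.0
draws = [random.gauss(0.0, sigma) for _ in range(200_000)]

mean_abs = sum(abs(x) for x in draws) / len(draws)
rms = math.sqrt(sum(x * x for x in draws) / len(draws))

print(round(expected_ratio, 4))   # analytic value of sqrt(2/pi)
print(round(mean_abs / sigma, 3)) # simulated mean absolute error, in sigmas
print(round(rms / sigma, 3))      # simulated RMS error, in sigmas
```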

bien merci Peter D

that is a wonderful read.

😉

Here is what I want to see from a presimodel. First, an early prediction of the likely winner (electoral college, of course). For late predictions, I think focusing on EV predictions is too easy. I want to see a prediction for all 58 electoral outcomes (48 winner take all states + DC + 4 NE outcomes + 5 ME outcomes).

I would be impressed with a model that puts all the outcomes in the correct order, from most red to most blue, even if it missed the EV vote outcome. For example, in 2008 I predicted the correct result of every electoral college contest except Missouri. But Missouri was the closest outcome, by far. I felt that this was a slightly more accurate prediction than Dr. Wang’s because he had the advantage of Missouri = North Carolina in the electoral college. Of course the lack of polling in NE made it impossible for him to predict that electoral vote.

In case anyone is wondering, I think you should put your money on Dr. Wang’s forecast (or Drew Linzer’s at votamatic.org) rather than mine. I have been too busy to put much effort into making a prediction. Just to get mine on the record:

I think Linzer’s model has the correct R2B order for the states. I predict his current EV outcome ( i.e. Obama takes FL and every more Dem leaning state, Romney takes NC and below). Romney will win all of NE and Obama will win all of ME. However I think NC will be closer than FL. FWIW, Linzer’s model should have FL going to Romney soon, unless more Obama leaning polls come out.

Oops, that first line was supposed to say presidential voting model, not presimodel.

Do you post your predictions/model runs on a publicly accessible site?

Thanks,

M

“Presimodel” is not a bad word, though.

Regarding “expert”-based evaluations, I see no meaningful way to judge the accuracy of pundits who are operating predominantly on a subjective basis.

Even if they called the correct outcome (even to a specific degree of certainty), there is no way to know if it was skill or simple chance. The only way to grade their success rate would be to sample them repeatedly over time (or over a variety of specific races down-ticket). Think of it like a mutual fund that does astoundingly well one year, only to under-perform for the next several.

If you can quantitatively score the pundit predictions, you could test the median prediction of the pundits. I hear that this works very well when applied to poll aggregation. Then again, the center of a pile of crap is still crap.

I have a question regarding you vs. 538 (apologies if this retreads something you’ve already answered elsewhere). A few notes before I dig in:

1) I only have a basic college statistics background. I’m reasonably facile with interpreting things, but my theoretical foundation is weak, so feel free to dismiss me if I’ve missed something fundamental.

2) I’m going to ignore the “economic” portion of 538’s model for this, which I think he should have discounted to zero a month ago.

Your primary disagreement with Nate seems to be that you think he’s underconfident / he thinks you underestimate uncertainty. However, there appear to be multiple possible sources for this disagreement.

1) You disagree on the CI of the polls

2) You disagree on the possibility of systematic error in the polls not captured by CI

3) You disagree on the range of possible movement in polls between now and the election

Is it possible to clarify what the source of this disagreement is? For what it’s worth, I’m asking because you seem to be the two most credible, so I’m intrigued about the differences in methodological choices.

In essays like this one, I tend to state the bottom-line differences rather than the logical issues. If you search this site for FiveThirtyEight you can see the kind of problems I have noticed. Basically, I think he does messy analysis that throws out information, adds unnecessary factors, with effects such as blurring the odds as well as time resolution.

However, the bottom line for you is this. For purposes of public consumption he is fine. He has good intuitions and is more concerned with getting things right than with anything else.

Dan – My own impression is that in the end the disagreements boil down to your item 2: the chance of systematic error. There are many other differences in how the models are composed, but the confidence levels on 538 are smaller chiefly because Nate Silver assumes a greater chance for polls to have a systematic error (for all of the US or for some regions/demographics) than Sam Wang does.

This, by the way, may have some effect on the terms of the challenge – checking the probabilities for each of the 50 states (or rather 58 contests) is a great way to compare predictions *assuming the probabilities are independent from one another*. But if they include a systematic error component that covers many states together, there may be no way to compare predictions, on this parameter, other than waiting a few centuries until we have a sufficient number of data points.

PS: But while looking at the outcomes of the presidential election in each state may suffer from this problem, Senate races should be much less affected (and there may be a way to separate out the presidential coattails effect, if any).

Damn, you’re good. Still studying.

Regarding the storm’s effect on the election, the fact that Democrats actually believe in the concept of Government probably redounds to their benefit in a situation where Government action is required.

Gov. Romney would probably suggest that private companies could handle disaster prep and recovery better.

Tom, I think you are right, with this caveat: It then depends on the quality of the government response to the disaster.

People want government out of their lives — except when they want its help, which is more often than many admit to. Natural disasters, they pretty readily admit to wanting the help.

Bush II took a big public relations hit for his mediocre response to Katrina. Luckily for him, 2005 wasn’t an election year. I agree with you that Obama should benefit this year (getting ghoulish here, aren’t we), provided that the government’s response is speedy and effective.

Ohio Voter, he recommended that exact step last summer (2011 Republican primary debates): thinkprogress.org/climate/2011/06/14/244973/mitt-romney-federal-disaster-relief-for-tornado-and-flood-victims-is-immoral-makes-no-sense-at-all/

I would really love someone to ask him about that this week.

“There are no atheists in foxholes”

attrib: Reverend William T. Cummings

People of all political persuasions go for federal funds after a hurricane. I know this because I lived in Florida for 18 years, and everyone was a fan post-storms.

It will be very interesting to see how carefully Romney treads on this issue. I would think the natural inclination (especially if still behind in swing state polls) might be to try to pile on criticism of any potential deficiency in an official response a la Katrina, but I also think there is a huge risk factor in being perceived to be again trying to politicize human tragedy.

orchidmantis: that is a great find! That needs to get into the right hands.

Sam, I think the only way to fairly measure performance would be to do #2: matching each state’s projected score against the actual result. 538, your site, and others all aggregate polls to some extent and this measure would present the “most accurate” aggregating method, whether it be median-based or something more aggressive like Nate Silver’s.

@Ross C - ThinkProgress is leading with it even as we speak. Maybe someone in the less “partisan” media will run with it.

Does anyone have any thoughts on how this storm could affect voting in the Philadelphia area? If there is a sustained power loss, it will be harder to get people to the polls there and could tilt the election to Romney.

Depends upon paper or electronic ballots. This is another strike against electronic voting.

From my experience, these storms hit rural areas harder than urban ones. Power companies have (at least in FL they did) plans to restore power to areas with

1 - police & fire stations

2 - grid sections that have hospitals in them

3 - bang for the buck:

They restore areas with the most customers before sparser ones because they do not bill when power is out for more than x hours (48 in my FL area).

Cities are also much less prone to long-term power outages. The lines are mainly buried and the power stations are local and connected to the cities with highly wind-resistant trunk lines, not wooden poles under tree cover. Suburban areas are hit hard due to the tree growth that has been watered with sprinklers (shallow roots since the water is in the lawn, not in the sub-soil).

It will be interesting all in all.

I have no doubt that Obama will dump huge resources to the PA area. Again, my concern is people not voting due to flooding, home damage, etc. Get-out-the-vote operations will be affected. Republicans tend to vote even with a limb missing.

@Richard Vance. PA and VA rely heavily on electronic balloting. But again, my concern is Philly being the D’s stronghold and it getting slammed by the storm. How will that affect turnout?

If credit is given only for “correct races called with >50% probability,” then there is no downside to predicting everything at 100%. The product of the forecast probabilities has some information theory appeal.
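The product of forecast probabilities mentioned here is the likelihood of the observed results under the forecast, and its logarithm is the usual log score. The sketch below shows how it penalizes a forecaster who predicts everything at 100%. The races and probabilities are hypothetical.

```python
import math


def log_score(win_probs, dem_won):
    """Sum of log-probabilities the forecast assigned to what actually happened.

    Equals the log of the product of forecast probabilities for the observed
    outcomes; higher (closer to zero) is better.
    """
    total = 0.0
    for p, won in zip(win_probs, dem_won):
        p_outcome = p if won else 1 - p
        if p_outcome == 0:
            return -math.inf  # a "certain" call that missed zeroes the product
        total += math.log(p_outcome)
    return total


results = [True, True, False]     # hypothetical outcomes (True = Democrat won)
hedged = [0.9, 0.8, 0.6]          # forecaster who states honest uncertainty
overconfident = [1.0, 1.0, 1.0]   # forecaster who calls everything at 100%

print(math.exp(log_score(hedged, results)))  # product of probabilities, about 0.288
print(log_score(overconfident, results))     # -inf: one miss at 100% is fatal
```

Under this rule both forecasters called the third race wrong, but only the one who claimed certainty is wiped out, which removes the incentive to round everything up to 100%.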

This old guy who once worked with the Von Braun Rocket team, and thoroughly appreciates the aggregation of multiple models says thanks to Dr. Wang for the time, energy, and good humor used here. I would be near insanity looking at the talking heads and reading the opinion (facts don’t matter) pieces and would be stressed thinking the RR people would be voluntarily chosen by the USA voters.

That said I still worry about the outlier events as they are possible, and in my experience Mr. Murphy and cast haven’t had their real fun yet. If that rocket can malfunction, it will. Must have plans B, C, etc. in place. So I’m happy to see the President is now in Florida; even if he doesn’t carry the state he can nail down RR there and force them to burn resources.

In the meantime Sandy may be a blessing in disguise, it gives the president lots of free TV time, acting Presidential, in command and confident. He just needs to make sure that NO effort is spared in preparation and relief efforts and the PR that goes with that.

This is to answer a question someone asked about the electricity outage in Pa…

Heavily democratic Philadelphia uses a voting machine that has a back-up battery of 16 hours. That will protect Philly’s voters on election day if power is out in that County.

Philly is 1/8 of Pennsylvania total votes!!!

If Philly voting machine works during the power outages, the race goes to Obama, period.

If you’re in Pa, just ask the city hall to make sure the machine is charged up.

John Sides is full of it. What matters is how a leader reacts to a natural disaster. Screw things up like Bush and people will blame you. Deal with it in an effective rational way and people will call it leadership.

Our First Selectman (sort of like a mayor for those of you not from New England and who are unfamiliar with the Town Meeting form of government) has gotten very high marks for how he has dealt with a series of storms including floods, a hurricane and last year’s October snow storm. He uses Facebook, twitter and any other method that works to communicate what’s happening to residents. And he’s been proactive in getting his emergency management team in place.

After last year’s snow storm we had the power outages mapped and crews at work before a lot of other towns in Connecticut even knew where their outages were. And now he’s been at work since before last Friday getting ready for this current event. He’s gotten high marks for how he’s tackled these storms, even from his critics.

It’s not the natural disaster, it’s how you handle it.

Suggested clarification: you can’t do much to evaluate #4 and #5 (uncertainty and outcome probability) for just a single outcome, i.e. the overall EV count or popular vote margin. We probably want to look at all state outcomes when evaluating them.

That’s just for the election-eve predictions, however. Is it possible to evaluate error on #3 (long-term prediction) using just a single race? It seems reasonable to assume that there is a probability of some overall shift in a race across all states; in fact, most of the shift between, say, July 15 and election day is correlated. A model should give odds on that correlated shift.

How do you evaluate one model against another on that front for only one race? I’m not sure you can.

First of all, thank you Sam for the great site and for coming up with the meta margin. Undoubtedly the most elegant way to simply show state of the race.

I actually think #3 is the least interesting of the metrics. An accurate estimate 4-5 months in advance basically means the campaigns, or what the candidates say and do, don’t matter at all. A tempting thought, but not a strong assumption. Or in other words, if your 4 month old prediction turns out to be ultimately accurate it’s because the campaigns were unsuccessful in changing public opinion. If one candidate or campaign proved to be especially compelling, or if something external happened (terror attack, let’s say) that wouldn’t mean your model wasn’t good – it means things actually changed.

So I think the thing that matters most is #1 and #5. The first indicates ultimate accuracy. The second is the validity of the modeling.

I’d be quite happy to find that no method was very good at #3; I like to think that campaigns matter. But if people are going to make predictions based on economic factors, I’d like to understand how well they do compared to polling ones.

“An accurate estimate 4-5 months in advance basically means the campaigns, or what the candidates say and do, don’t matter at all.”

This is an interesting point. However, rather than the campaigns not mattering, it means that the impact of campaigns is predictable.

And the campaign has been going on for 2-4 years. Since there is effectively no legislative activity in the 4 months prior to an election, then there really isn’t much new information available for the electorate.

If one were to choose to call the last 4 months the campaign and the prior 3 2/3 years governing, then it seems appropriate that the impact of 4 months is small.

That there is such a dearth of Republic Party participants on this phenomenal statistical mecca confirms that very few of them desire to intellectually engage; that is, to render and dissect political/electoral information honestly, impartially and empirically in teleological thesis of some predictive accord, even with “enemies” of their persuasions.

What I DO find is hordes of very angry, relatively uneducated (judging from even the most rudimentary assessment of the writing), unconsciously inculcated, puerile, socially vile, and emotionally disorganized/dysfunctional partisan pit bulls assiduously mauling each other without civility or merit. I am uncertain if it is a cultural defect or a conglomeration of like-minded, regressive-thinking, implacably rigid, and extremely inflexible in any capacity Republic Party rabble oozing racism, abject hatred, misogyny, religious fundamentalism/literalism, and a glaring lack of anything approaching empathy or intelligent compassion.

There are a plethora of DemocratIC incorrigibles, too — but the nature of the insults or hubris is much less vicious; perhaps that is because it is a known quality that tolerance begets a kinder disposition because it is inclusive of the “47%” and far more tolerant of sociocultural differences.

I unfurl screed by way of getting to my point on polling, predictive qualities, methodologies, and the prostitution of “analysis” by party affiliation. I s’pose that if my candidate was clearly objectively trailing according to the preponderance of educated, factually rhadamanthine, scholarly, impartial and self-examining sources of predictive enterprises — such as this one, 538, etc — perhaps I would be unable to stomach the truest possible interpretation of political reality, too. It seems almost a moribund process, interpreting with attention to verity, researching with a dialectical and critical filter, and somehow allowing for enough comity that polling might be shared and understood as NOT a manipulation of reality by both parties.

But clearly the Republic Party cannot stray a single canon from the unscientific, magical-thinking precepts that global warming is a hoax, the myriad offensive assertions about women & their reproductive authority, the age of the earth, etc. The professional, exorbitantly paid media agitators that dissemble these people into rabelaisian hordes of enmity are a great part of this and have no incentive to access their humanity. This is too bad because there is one America but with two antebellum sections, unfortunately — in fact, these prejudicial “legacies” of the stereotypical religious orthodox, the social luddites, the intolerance of other lifestyles and races, etc — it is these very introjected character flaws that subject them to being leashed to the shameful manipulation of such a few wealthy people profiting from it.

So I guess I will live out the rest of my days with a 4th Estate that abets the frenzy over polling hucksters like Gravis, Rasmussen, Gallup, etc. I will have to watch our country’s denizens writhe and grind out hatreds because the lingua franca of politics is increasingly intransigence. I will have to listen/watch/read the breathless exuberance every single poll engenders even though the bulk of these are national snapshot or partisan-tendentious detritus in a sea of others. Hopefully, Mr. Wang and Mr. Silver and their like-minded colleagues will continue to provide a statistical/analytical oasis of intellectual comport and actual information that eschews the political invidiousness. Thank you for sites like this!

P.S. Well struck, Mr. Sabl. The Republic Party has been coopting and perverting language for framing purposes with ever-increasing shamelessness. That they cannot even summon the integrity, respect and civility to address the DemocratIC Party with the proper nomenclature mirrors their contempt for anything and anybody not like them, and therefore subject to their reality manipulations.

So I have taken to calling these extremists The Republic Party; and in a sense, it is indeed true that the current amalgamation of social troglodytes and religious literalists is NOT the Republican Party. My parents were both Republicans and I remember them arguing politics with Democratic friends, but it was understood that politics was an important framing of existential analysis by our country, not an internecine massacre of any common ground. There are very few Republicans left in the Republic Party, and the ones that remain are being ideologically purged in preference of an ever-crazier representative.

But the point you made in your humorous rejoinder is one that is keenly palpable among most Democratic friends that I know. Good work!

An “ic” for an “ic”, I say: if the center-right party is to be called “Democrat” then the far-right party is “Republan”.

Right now, the storm is having little discernible effect on the presidential race; but if it proves as devastating to large numbers of Americans as feared (and I hope not), I believe it will largely benefit our President by confirming his intelligence, compassion, empathy and belief in the value of federal programs. On the other hand, I think it will highlight Romney’s slippery, technocrat identity and the pettiness/crassness of the Republican Party. In a time of extreme crisis, would you rather have Obama/Biden at the helm or Romney/Ryan?!

@jonathan: no it more likely means both candidates and campaigns have done a nearly equally good job. Generally speaking both sides have lots of money and professionals trying their damnedest. What they do or say matters, in that they can either get run over or fight to the end. Think what would have happened to Romney had he lost the first debate. … It would have been a blow out. Generally, you can count on that not happening. Btw, I believe Nate Silver had a post on your question a couple of months ago…

I would view 1 & 5 as important. Would not care about the other three.

I look forward to a post-election summary of how the various models have done this time: the ten or so most notable, including the CO model that states Romney will win PA. What I would also like to see is whether/how each modeler decides to alter his model for the next election.

I would also be interested to see a comparison of #5 for Senate/House races vs. presidential. I would assume greater error given the smaller number of polls for House races, but I am curious by how much.

About #3, I feel that electoral politics is not an area where a really long term guess is very predictive, even if it DOES end up being correct. Because we are not talking about an enclosed system that we understand and can make reasonable assumptions about how it will perform. If the polls showed a +2 for Obama in May but North Korea launched a nuke on South Korea in August and the race turned Romney+10 the May estimate has not been invalidated, it was a good guess at the time, but the chaotic nature of the world intervened. I like what you are doing here but the success or failure of a long term call does not really prove much other than that a lot of people made up their minds a long time ago and nothing in the news and campaigning changed that much. That could easily prove untrue next cycle, or next week.

Politico natl. poll, 3 pt shift to O since debate 2 and 3. O leads 49/48.

http://www.politico.com/polls/politico-george-washington-university-battleground-poll.html

Dr Wang, in addition to the five benchmarks you list, I am concerned with a sixth — call it robustness of prediction, if you will. And let me stick with your PEC prediction and methodology, which is wonderfully transparent and has a great track record.

My question is this:

(6) What type/amount of deviation between results and prediction may lead us to conclude that cheating has occurred?

It would be nice to lay out the statistical case, BEFORE the election happened, of “This is what we would expect to see if someone were to play shenanigans with the voting machines.”

I love how on Morning Blow they hype the OH poll showing the race tied without a mention of the three polls conducted AFTER that one that have come out in the past two days. Two of which are O+5 and one O+4. The MM will hype this as a horserace all the way til the end

Can anyone explain to me why the Meta-Margin has crept upward over the past 36 hours or so? Much as I am buoyed by this, I do NOT want to get false hopes that the election is truly moving in the direction that I favor!!

Some favorable polls from VA, OH, FL and NH. Check out pollster. There was really only one poor poll in that time frame. The MM is selling a horserace but the polls are showing a drift toward O

Basically–lots of favorable state polling for the President over the weekend. Expanding leads in Wisconsin, Michigan, etc., steady consistent polling in Ohio, a tie in Virginia, and taking a lead in New Hampshire have all contributed greatly.

The MM rose when a handful of polls were released yesterday (in OH, VA, and FL) that have Obama ahead of Romney by 1 to 4 percentage points. You can click on those states in the Power of Your Vote table in the right-hand sidebar and see Pollster’s latest polls.

Here’s what changed from 8pm last night to 8am this morning to move the MM from 2.14 to 2.20:

NH: O+3 => O+2.5

FL: R+1.5 => R+0.5

In weather and climate forecasting we also are concerned about reliability, or the ability of a forecast system to reproduce the expected weather *statistics*. (There’s probably a more standard term out of our field–we have a tendency to rename standards).

The idea is that any probabilistic forecast should, over time, have its *forecast* distribution match the *actual* distribution. To test this requires a large database of forecast opportunities, which is fairly easy for weather but somewhat more difficult for climate, especially for forecasts over longer time periods. [I do decadal forecasts, which means we’ve had only a handful of forecast opportunities.]
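A reliability check of this kind can be sketched as a simple binning exercise: group forecasts by stated probability, then compare each group's average stated probability with how often the event actually happened. The code below is a minimal illustration under my own assumptions (the function name `reliability_table`, equal-width bins, and the sample numbers are all hypothetical), not a standard from either field.

```python
from collections import defaultdict


def reliability_table(probs, outcomes, n_bins=5):
    """Group forecasts into equal-width probability bins.

    Returns a list of (mean forecast probability, observed frequency, count)
    tuples, one per non-empty bin, ordered from lowest bin to highest.
    """
    bins = defaultdict(list)
    for p, o in zip(probs, outcomes):
        # A probability of exactly 1.0 goes into the top bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, o))

    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        freq = sum(o for _, o in pairs) / len(pairs)
        table.append((mean_p, freq, len(pairs)))
    return table


# Made-up forecasts and outcomes, for illustration only.
example_probs = [0.1, 0.1, 0.9, 0.9, 0.9, 0.9]
example_outcomes = [0, 0, 1, 1, 1, 0]
for mean_p, freq, count in reliability_table(example_probs, example_outcomes):
    print(f"forecast ~{mean_p:.2f}  observed {freq:.2f}  (n={count})")
```

A reliable forecaster's rows have observed frequencies close to the stated probabilities. With only a handful of forecasts per bin, the observed frequencies are too noisy to say much.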

Given the small number of elections, how do you address this?

Excellent question. In election models, the answer is we can’t. The number of forecasting opportunities you need to verify your model is about the same as the number of historical events you need to calibrate your model.

The typical rule of thumb in any statistical modeling is you need many more calibration opportunities than the number of random variables in your model (say ten times more).

If you have a model with 10 variables, this means you need 100 “elections”, or more than is available (even if this country had 1000 years of history, old data becomes less and less suitable for calibration and should be used carefully.)

Much more important than an ingenious model is having enough data to make the model meaningful.

Beware of correlation models that use a large number of variables simply because that gives them a high r^2!

I remember reading, a month or so ago, about the forecast from University of Colorado researchers that predicted a significant Romney victory, but I haven’t found it since then. Does anyone have a link to this model?

You can find their paper on their websites (Kenneth Bickers of CU-Boulder and Michael Berry of CU Denver).

I read their paper carefully, and this is what I can tell you. They do a multi-dimensional regression (about 12 parameters if I remember correctly), and get an r^2 of about 0.85.

HOWEVER, when you consider their number of dimensions AND the very short history for validation, the regression uncertainty must be very significant indeed. Their “backtesting” appears to have been done WITHIN the calibration sample, thereby rendering it basically invalid.
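One textbook way to see why a high r^2 means little when there are many parameters and few observations is the adjusted r^2, which penalizes each added parameter: adjusted r^2 = 1 - (1 - r^2)(n - 1)/(n - p - 1). The sketch below plugs in the roughly 12 parameters and r^2 of about 0.85 recalled above. The sample sizes are hypothetical, since the number of observations in the paper is not given here, and this is not a reconstruction of the authors' own analysis.

```python
def adjusted_r2(r2, n_obs, n_params):
    """Adjusted R^2 for a regression with n_params predictors and n_obs observations."""
    dof = n_obs - n_params - 1  # residual degrees of freedom
    if dof <= 0:
        raise ValueError("need more observations than parameters + 1")
    return 1.0 - (1.0 - r2) * (n_obs - 1) / dof


# r2 and n_params follow the figures recalled in this thread;
# the sample sizes below are hypothetical.
for n_obs in (16, 50, 1000):
    print(n_obs, round(adjusted_r2(0.85, n_obs, 12), 3))
```

With 16 observations the adjusted value falls to 0.25, while with 1000 it stays near 0.85. This is only an in-sample correction; it does not replace testing the model on elections held out of the calibration sample.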

Their model does not include what appears to be the most important categorical variable so far this season: First debate performance.

I wrote them asking relevant technical questions and my emails were ignored.

I am a reader of 538, but have found myself growing frustrated with it. I find that Nate’s attempt to “score” the impact of economic data and include it in his model dilutes his assumptions.

The impact of any given economic data point would eventually be reflected in the polling data, correct? So, in essence, Nate Silver is counting this impact twice.

He includes both separately because he views both as explanatory variables (true, polls incorporate economic data, but not to the extent to make economic data superfluous.)

There is no harm in double counting, however. If you add superfluous variables to a model, calibration takes care of their being redundant and the results don’t get “inflated” as a result.

My concern about 538 is the lack of data to make meaningful calibrations – they don’t reveal the details of their model, but the more variables you keep, the more (much much more) observations you need!

Dr. Wang, thanks for clearly setting out the benchmarks to compare performance of different predictive models.

Generically speaking, one basic test that every model should be able to pass is the ability to beat a naive forecast.

Is it possible to establish what that naive forecast would be in this type of situation, considering that there is no “actual” value for the previous month?

I’m not sure if this naive forecast test is relevant in the case of an election, but it’s not clear that the various models being published are meaningfully better than a naive forecast.

In this case, is it feasible to establish a proxy for the previous period’s “actual” value?
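Whatever the naive forecast is taken to be, the comparison itself is usually expressed as a skill score: one minus the ratio of the model's mean squared error to the naive forecast's. The sketch below assumes, purely for illustration, that both the model and the naive baseline produce numeric forecasts (for example, state margins) that can be compared with the final results. The function names and numbers are hypothetical, and the choice of baseline remains the open question raised above.

```python
def mean_squared_error(forecasts, actuals):
    """Average squared difference between forecasts and actual values."""
    if len(forecasts) != len(actuals) or not forecasts:
        raise ValueError("need equal-length, non-empty inputs")
    return sum((f - a) ** 2 for f, a in zip(forecasts, actuals)) / len(forecasts)


def skill_score(model, naive, actuals):
    """1.0 = perfect model, 0.0 = no better than naive, negative = worse than naive."""
    naive_mse = mean_squared_error(naive, actuals)
    if naive_mse == 0:
        raise ValueError("naive forecast is already perfect; skill is undefined")
    return 1.0 - mean_squared_error(model, actuals) / naive_mse


# Made-up margins in percentage points, for illustration only.
actual_margins = [2.0, 4.0]
model_margins = [1.0, 4.0]
naive_margins = [0.0, 2.0]
print(skill_score(model_margins, naive_margins, actual_margins))
```

A model that cannot produce a clearly positive skill score against a simple baseline has not shown that its extra machinery adds anything.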

I’m curious as to why Rumsfeld is frequently credited with “unknown unknowns”. I heard that expression decades before he said it.